Frozen Backpropagation: Relaxing Weight Symmetry in Temporally-Coded Deep Spiking Neural Networks

Published on arXiv, 2025

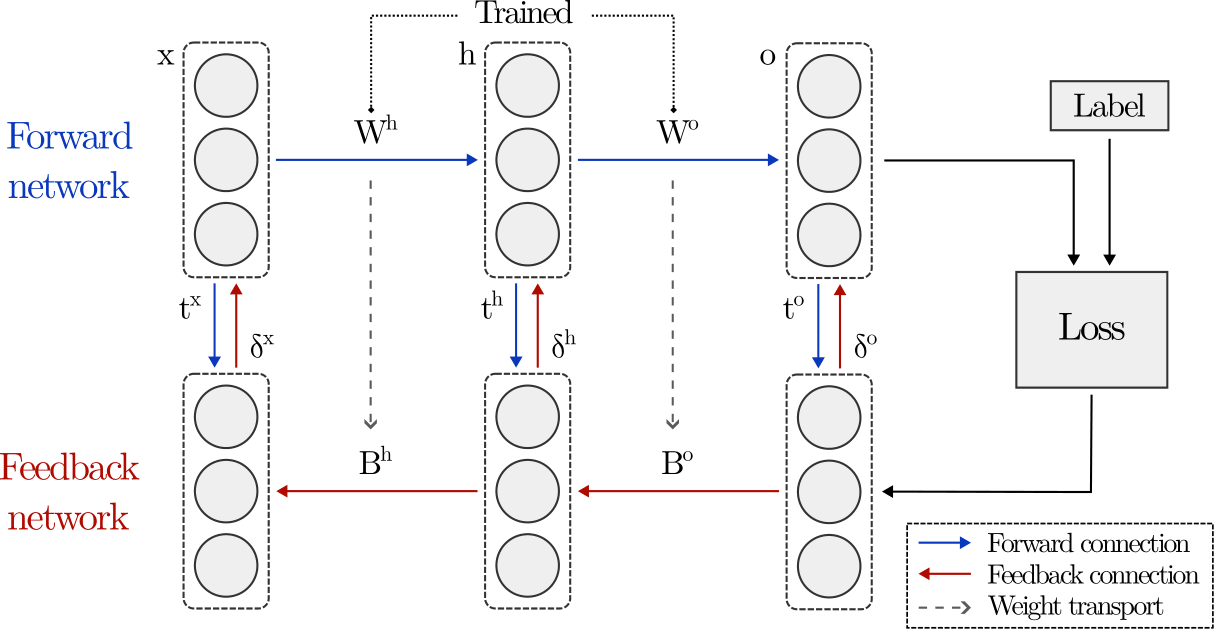

In this paper, we consider a dual-network configuration that uses separate networks with distinct weights for the forward and backward passes. This configuration is relevant for implementation on neuromorphic hardware, but it complicates training with backpropagation (BP): correct credit assignment requires the forward and feedback weights to remain exactly symmetric throughout training. We introduce the Frozen Backpropagation (FBP) algorithm, which relaxes this weight symmetry requirement without compromising performance and thereby enables a more efficient implementation of BP-based training on neuromorphic hardware.
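Why exact symmetry matters can be illustrated with a minimal sketch, assuming a small non-spiking two-layer network (tanh hidden layer, linear output, squared-error loss) rather than the temporally-coded SNNs studied in the paper. The matrix `B2` is a hypothetical stand-in for the weights of the separate backward network. The backward pass yields the true gradient only when `B2` equals the transpose of the forward weights `W2`. This is not the FBP algorithm itself; it only shows the symmetry condition that FBP relaxes.

```python
import numpy as np

def forward(x, W1, W2):
    """Forward pass: tanh hidden layer followed by a linear output layer."""
    a1 = W1 @ x
    h = np.tanh(a1)
    return a1, h, W2 @ h

def loss(x, y, W1, W2):
    """Squared-error loss L = 0.5 * ||out - y||^2."""
    _, _, out = forward(x, W1, W2)
    return 0.5 * np.sum((out - y) ** 2)

def grad_W1(x, y, W1, W2, B2):
    """Gradient estimate for W1 when the output error is propagated
    through the feedback matrix B2 (same shape as W2.T) instead of W2.T."""
    a1, _, out = forward(x, W1, W2)
    delta_out = out - y                                    # dL/d(out)
    delta_h = (B2 @ delta_out) * (1.0 - np.tanh(a1) ** 2)  # backward pass uses B2
    return np.outer(delta_h, x)

def numerical_grad_W1(x, y, W1, W2, eps=1e-6):
    """Central finite differences of the loss w.r.t. each entry of W1."""
    g = np.zeros_like(W1)
    for idx in np.ndindex(W1.shape):
        Wp, Wm = W1.copy(), W1.copy()
        Wp[idx] += eps
        Wm[idx] -= eps
        g[idx] = (loss(x, y, Wp, W2) - loss(x, y, Wm, W2)) / (2 * eps)
    return g

rng = np.random.default_rng(0)
x, y = rng.normal(size=4), rng.normal(size=2)
W1, W2 = rng.normal(size=(3, 4)), rng.normal(size=(2, 3))

# Symmetric feedback (B2 == W2.T): identical to standard backpropagation.
g_sym = grad_W1(x, y, W1, W2, W2.T)
# Asymmetric feedback: the backward weights have drifted away from W2.T.
B2 = W2.T + 0.5 * rng.normal(size=W2.T.shape)
g_asym = grad_W1(x, y, W1, W2, B2)

g_true = numerical_grad_W1(x, y, W1, W2)
print("symmetric matches true gradient: ", np.allclose(g_sym, g_true, atol=1e-6))
print("asymmetric matches true gradient:", np.allclose(g_asym, g_true, atol=1e-6))
```

In a dual-network setup, exact BP would require copying the forward weights into `B2` after every update; FBP is presented as a way to relax that requirement.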

Recommended citation: Gaspard Goupy, Pierre Tirilly, and Ioan Marius Bilasco. Frozen Backpropagation: Relaxing Weight Symmetry in Temporally-Coded Deep Spiking Neural Networks. arXiv, arXiv:2505.13741, 2025.